9 Questions on Information Theory

Thursday, February 13th, 2014 | Author: Konrad Voelkel

Back in 2010 I wrote a series of posts on questions in information theory that arose from a two-week seminar with a group of students from various scientific disciplines (a wonderful event!). Here are the ones I still find particularly compelling:

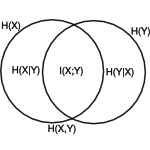

- Is the mathematical definition of the Kullback-Leibler divergence the key to understanding different kinds of information?

- Can we talk about the total information content of the universe?

- Is hypercomputation possible?

- Can we tell whether a given physical system is a Turing machine?

- Given that every system is continually being measured, is the concept of a closed quantum system (with unitary time evolution) relevant to real physics?

- Can we create or measure truly random numbers in nature, and how would we recognize them as truly random?

- Would it make sense to adapt the notion of real numbers to a limited (but not fixed) amount of memory?

- Can causality be defined without reference to time?

- Should we redefine “life” in information-theoretic terms?
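For readers who want the definition behind the first question at hand: for discrete distributions $P$ and $Q$ on the same set, the Kullback-Leibler divergence is $D_{\mathrm{KL}}(P \,\|\, Q) = \sum_x P(x) \log_2 \frac{P(x)}{Q(x)}$, measured here in bits. It is often called a "distance", but it is not symmetric and so is not a metric. The following is only a minimal sketch, assuming finite distributions given as plain lists of probabilities; the function name `kl_divergence` and the example distributions are illustrative choices, not anything from the original seminar.

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) in bits for two finite distributions given as lists.

    Illustrative sketch: assumes p and q are probability vectors over the
    same outcomes, listed in the same order.
    """
    if len(p) != len(q):
        raise ValueError("distributions must be defined on the same outcomes")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # convention: 0 * log(0 / q) = 0
        if qi == 0:
            return math.inf  # P assigns mass to an outcome Q rules out
        total += pi * math.log2(pi / qi)
    return total

# A fair coin versus a heavily biased coin (hypothetical example values).
fair = [0.5, 0.5]
biased = [0.9, 0.1]
print(kl_divergence(fair, biased))
print(kl_divergence(biased, fair))
```

The two printed values differ (roughly 0.737 bits versus 0.531 bits), which is exactly the asymmetry that keeps the divergence from being a true distance.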

What do you think?